Discover how to enhance data visualization with AI, a transformative approach that elevates how we interpret and present complex data. Leveraging artificial intelligence enables the creation of more dynamic, insightful, and interactive visual representations, empowering users to uncover deeper insights and make informed decisions.

This guide explores the latest techniques, tools, and best practices for integrating AI into data visualization workflows. From advanced analysis methods to designing engaging dashboards, the content aims to equip you with the knowledge to harness AI’s full potential in transforming your data presentations.

Overview of Enhancing Data Visualization with AI

Artificial Intelligence (AI) has revolutionized the landscape of data visualization by enabling smarter, more dynamic, and insightful representations of complex datasets. Traditionally, data visualization relied heavily on manual design and static visuals, which often limited the ability to uncover deep insights quickly. The integration of AI transforms this paradigm, offering automated analysis, pattern recognition, and adaptive visualization techniques that cater to diverse user needs and complex data structures.

By harnessing AI, organizations can not only improve the clarity and efficiency of data presentation but also unlock hidden correlations and trends that might remain obscured in traditional methods. This evolution enhances decision-making processes, fosters more interactive experiences, and supports real-time data analysis, making data visualization a more powerful tool for strategic insights and operational intelligence.

The Role of Artificial Intelligence in Transforming Traditional Data Visualization Techniques

Artificial Intelligence elevates conventional data visualization by automating tasks such as data cleaning, feature detection, and anomaly identification. Machine learning algorithms can analyze vast datasets to identify significant patterns that inform visualization design, such as clustering similar data points or highlighting outliers. Natural Language Processing (NLP) enables the creation of conversational interfaces, allowing users to query data in natural language and receive visual responses in real time.

AI-driven techniques also facilitate the customization of visualizations based on user preferences and context, making the data more accessible and actionable. For example, AI-powered dashboards can adapt in real time to changing data trends, so that users see the most relevant information without manual intervention. These capabilities can improve the precision, responsiveness, and depth of insights derived from data visualization.

Benefits of Integrating AI into Data Visualization Workflows

The integration of AI into data visualization workflows offers numerous advantages that substantially improve the analytical process:

- Enhanced Data Analysis: AI algorithms can automatically detect patterns, trends, and anomalies, reducing the manual effort required and increasing accuracy.

- Improved User Experience: AI enables interactive and intuitive visualizations, such as chatbots and voice-activated interfaces, making data more accessible to non-experts.

- Real-Time Insights: AI facilitates live data processing and visualization, allowing organizations to respond swiftly to emerging issues or opportunities.

- Automation and Scalability: Automated processes enable handling large and complex datasets efficiently, scaling visualization efforts without proportional increases in resource requirements.

- Customization and Personalization: AI tailors visualizations to suit individual user needs, preferences, and roles, enhancing relevance and comprehension.

For instance, financial firms use AI-enhanced dashboards to monitor market fluctuations in real time, automatically highlighting critical changes and forecasting future trends based on historical data. Similarly, healthcare providers employ AI-driven visualizations to track patient outcomes and identify potential risks proactively.

Evolution of Data Visualization with Advancements in AI Technology

The trajectory of data visualization has been significantly shaped by rapid advancements in AI technology, transitioning from static charts to highly interactive, intelligent visual tools. Early AI applications focused on automating data preprocessing and basic pattern recognition, which laid the groundwork for more sophisticated visualization techniques.

Recent developments include the integration of deep learning models that enable feature extraction from unstructured data like images, text, and videos, broadening the scope of data visualization. Additionally, the advent of AI-powered visualization tools provides functionalities such as predictive analytics, anomaly detection, and natural language querying, making complex data accessible to a wider audience.

As AI continues to evolve, the future of data visualization promises increasingly immersive and personalized experiences, leveraging technologies like augmented reality (AR), virtual reality (VR), and advanced neural networks to interpret and present data in ways that were previously unimaginable.

Examples of this evolution can be seen in sectors such as manufacturing, where AI-driven visualization systems monitor industrial processes in real time and predict maintenance needs, and in urban planning, where AI models visualize complex city data to optimize infrastructure development and resource allocation. These advancements underscore the ongoing transformation of data visualization into an intelligent, interactive, and highly insightful discipline driven by AI innovation.

AI-Powered Data Analysis Techniques for Visualization

Leveraging artificial intelligence in data visualization enhances the ability to uncover actionable insights with greater accuracy and efficiency. By applying advanced machine learning algorithms and natural language processing, data professionals can identify intricate patterns and generate descriptive narratives that make complex data more accessible. Additionally, automating data clustering and segmentation streamlines the visualization process, enabling clearer, more meaningful representations of large datasets.

This section explores the core methods employed in AI-driven data analysis to optimize visualization outcomes, focusing on pattern recognition, natural language insights, and automated data segmentation.

Applying Machine Learning Algorithms to Identify Patterns in Data

Machine learning algorithms have transformed data analysis by automating the detection of complex patterns that might be overlooked through traditional methods. These techniques facilitate the segmentation of data based on similarities, trends, and anomalies, providing a foundation for compelling visual representations.

Common approaches include supervised learning models such as regression analysis and classification algorithms, which help predict outcomes and categorize data points effectively. Unsupervised learning methods like clustering algorithms—K-Means, hierarchical clustering, and DBSCAN—are particularly valuable for revealing inherent groupings within data without pre-labeled categories.

“Clustering algorithms enable visualizations that highlight natural groupings, revealing relationships and structures previously unnoticed.”

For example, in customer segmentation, machine learning can automatically group consumers based on purchasing behaviors, demographics, and engagement levels. Visual tools such as scatter plots or heatmaps then depict these clusters, enabling targeted marketing strategies and personalized experiences.
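The customer segmentation idea can be sketched with a minimal K-Means loop. This is an illustrative standard-library version, not a production implementation; the `kmeans` helper, the sample customers, and the two features (orders per year, average basket value) are assumptions made up for the example.

```python
import random
from math import dist

def kmeans(points, k, iterations=20, seed=0):
    """Minimal K-Means: returns (centroids, labels) for a list of coordinate tuples."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # pick k distinct points as starting centroids
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid.
        labels = [min(range(k), key=lambda c: dist(p, centroids[c])) for p in points]
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                centroids[c] = tuple(sum(axis) / len(members) for axis in zip(*members))
    return centroids, labels

# Hypothetical customers: (orders per year, average basket value)
customers = [(2, 20.0), (3, 25.0), (2, 22.0), (30, 210.0), (28, 190.0), (32, 205.0)]
centroids, labels = kmeans(customers, k=2)
```

The resulting `labels` can drive the color channel of a scatter plot. In practice, features on very different scales would usually be standardized first, and a library implementation would normally be preferred over a hand-written loop.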

Utilizing Natural Language Processing to Generate Descriptive Insights

Natural Language Processing (NLP) enhances data visualization by transforming raw data into human-readable insights. This approach extracts key terms, summarizes large text datasets, and generates narrative descriptions that accompany visual representations, making data more interpretable for diverse audiences.

Techniques such as sentiment analysis, topic modeling, and text summarization are employed to analyze textual data sources, including social media comments, survey responses, and news articles. Once processed, NLP tools can produce descriptive summaries that highlight key patterns or trends, enriching the visualization with contextual understanding.

- Sentiment Analysis: Identifies positive, negative, or neutral sentiments to gauge public opinion or customer satisfaction, visualized through color-coded charts or dashboards.

- Topic Modeling: Groups related terms into themes, allowing visual representations like word clouds or cluster maps to showcase dominant subjects within datasets.

- Automated Summarization: Condenses lengthy reports or datasets into concise narratives, often integrated with dashboards to provide instant insights without manual interpretation.

This process enables decision-makers to grasp complex insights rapidly, supported by AI-generated textual summaries that complement visual data displays.
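As a rough illustration of pairing sentiment analysis with a generated narrative, the sketch below classifies comments with a tiny hand-made word list and produces a one-sentence summary. The lexicon, the `classify` and `summarize` helpers, and the sample comments are all hypothetical; real systems would rely on trained models rather than keyword matching.

```python
from collections import Counter

# Tiny illustrative lexicon (an assumption for this example only).
POSITIVE = {"great", "love", "fast", "helpful"}
NEGATIVE = {"slow", "broken", "bad", "confusing"}

def classify(comment):
    """Label a comment positive, negative, or neutral by counting lexicon hits."""
    words = [w.strip(".,!?").lower() for w in comment.split()]
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"

def summarize(comments):
    """Return per-label counts plus a short narrative for a dashboard caption."""
    counts = Counter(classify(c) for c in comments)
    total = len(comments)
    top, n = counts.most_common(1)[0]
    return counts, f"{n} of {total} comments ({n / total:.0%}) are {top}."

comments = [
    "Great dashboard, love the filters!",
    "Export is slow and confusing.",
    "Fast and helpful support.",
    "It is a dashboard.",
]
counts, narrative = summarize(comments)
print(narrative)  # 2 of 4 comments (50%) are positive.
```

The `counts` mapping can feed a color-coded bar chart, while `narrative` serves as the accompanying text.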

Tools for Automating Data Clustering and Segmentation

Automated clustering and segmentation tools leverage AI to organize data into meaningful groups, simplifying the visual complexity of large datasets. These tools facilitate the creation of clearer, more informative visualizations by reducing noise and highlighting key data structures.

Prominent tools and platforms include:

| Tool | Features | Use Cases |
|---|---|---|
| RapidMiner | Supports various clustering algorithms, intuitive user interface, integration with Python and R | Customer segmentation, fraud detection, market analysis |
| KNIME | Visual programming environment, extensive machine learning nodes, scalable | Data segmentation, anomaly detection, predictive modeling |
| Google Cloud AutoML | Automated machine learning workflows, scalable cloud infrastructure, customizable models | Image and text segmentation, pattern detection in large datasets |

These tools typically incorporate algorithms like K-Means, Gaussian mixture models, and density-based clustering, which automatically identify natural groupings in data. The resulting clusters can be visualized through scatter plots, dendrograms, or heatmaps, providing a clearer understanding of complex datasets and enabling more targeted decision-making.

Designing Interactive and Dynamic Visualizations with AI

Incorporating AI into data visualization transforms static displays into engaging, real-time interactive dashboards. These advanced visualizations enable users to explore datasets dynamically, gaining immediate insights and fostering more informed decision-making processes. The integration of AI not only enhances user experience but also ensures that visualizations remain relevant and updated in fast-paced environments, such as financial markets, healthcare monitoring, and operational analytics.

AI-driven visualization design involves leveraging algorithms that facilitate real-time data updates and user engagement features. By embedding predictive analytics, adaptive filters, and responsive interfaces, organizations can create dashboards that respond intuitively to user inputs and evolving data streams. This approach ensures that visualizations are both informative and interactive, empowering users to drill down into details, explore scenarios, and receive tailored insights seamlessly.

Enabling Real-Time Data Updates and Interactive Dashboards

Modern data visualization platforms can be enhanced with AI algorithms that automatically refresh data feeds, ensuring dashboards reflect the latest information without manual intervention. This real-time capability is crucial in contexts such as stock trading, where seconds can determine success, or in network security, where immediate threat detection is vital. AI models analyze streaming data continuously, filtering noise and highlighting anomalies or trends that require attention.

To develop an AI-powered real-time dashboard, follow these key procedures:

- Integrate Streaming Data Sources: Connect data streams from sensors, APIs, or databases using platforms like Apache Kafka or MQTT, ensuring continuous data flow.

- Implement AI Data Processing Modules: Use machine learning models, such as anomaly detection algorithms or predictive models, to process incoming data and identify significant events.

- Establish Automatic Refresh Cycles: Configure dashboard components to update at regular intervals or upon detection of critical changes, ensuring data freshness.

- Design User-Friendly Interfaces: Incorporate visual cues, such as flashing alerts or color changes, to notify users of significant updates promptly.
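
The processing step in this procedure can be sketched as a rolling z-score detector that consumes one reading at a time. This is a simplified stand-in for a trained anomaly detection model; the `RollingAnomalyDetector` class, the window size, the threshold, and the sample stream are assumptions for illustration, and the list stands in for a real Kafka or MQTT feed.

```python
from collections import deque
from statistics import mean, stdev

class RollingAnomalyDetector:
    """Flags values that sit far from the mean of a recent window of readings."""

    def __init__(self, window=20, threshold=3.0, warmup=5):
        self.values = deque(maxlen=window)  # old readings fall out automatically
        self.threshold = threshold
        self.warmup = warmup

    def update(self, value):
        """Return True if value is anomalous versus the window, then record it."""
        is_anomaly = False
        if len(self.values) >= self.warmup:
            mu, sigma = mean(self.values), stdev(self.values)
            is_anomaly = sigma > 0 and abs(value - mu) / sigma > self.threshold
        self.values.append(value)
        return is_anomaly

detector = RollingAnomalyDetector(window=10, threshold=3.0)
stream = [10.0, 10.2, 9.9, 10.1, 10.0, 9.8, 10.3, 25.0, 10.1]  # hypothetical sensor feed
alerts = [i for i, v in enumerate(stream) if detector.update(v)]
print(alerts)  # [7]
```

A dashboard could use each alert index to trigger a color change or highlight. Note that the flagged value still enters the window here, which temporarily widens the band; excluding flagged values is an alternative design choice.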

Incorporating AI-Driven Filters and User Engagement Features

User engagement can be significantly enhanced by integrating AI-generated filters and personalized controls. These features allow users to tailor visualizations to their specific interests and improve overall usability. AI algorithms can analyze user behavior and preferences, offering recommended filters or highlighting relevant data segments.

Steps to embed AI-driven filters and engagement functionalities include:

- Collect User Interaction Data: Monitor how users interact with visualizations to understand their preferences and typical queries.

- Develop AI-Based Recommendation Engines: Utilize collaborative filtering or content-based filtering techniques to suggest relevant filters or data views.

- Implement Adaptive Filtering Options: Enable filters that adjust dynamically based on user selections, showing related data subsets automatically.

- Enhance User Interface Design: Incorporate intuitive controls such as sliders, checkboxes, and auto-suggestive dropdowns that adapt to user behavior.
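
A recommendation step along these lines can be sketched with a small user-based collaborative filter. The usage counts, user names, and the `recommend_filters` helper are hypothetical; the sketch only assumes that the dashboard logs how often each user applies each filter.

```python
from math import sqrt

def cosine(a, b):
    """Cosine similarity between two sparse count dictionaries."""
    dot = sum(a.get(k, 0) * b.get(k, 0) for k in set(a) | set(b))
    norm_a = sqrt(sum(v * v for v in a.values()))
    norm_b = sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def recommend_filters(target, usage, top_n=2):
    """Suggest filters the target user has not tried, weighted by similar users' usage."""
    scores = {}
    for user, counts in usage.items():
        if user == target:
            continue
        similarity = cosine(usage[target], counts)
        if similarity <= 0:
            continue  # ignore users with no overlapping behavior
        for name, n in counts.items():
            if name not in usage[target]:
                scores[name] = scores.get(name, 0.0) + similarity * n
    return sorted(scores, key=scores.get, reverse=True)[:top_n]

# Hypothetical interaction log: user -> {filter name: times applied}
usage = {
    "ana": {"region": 5, "quarter": 3},
    "ben": {"region": 4, "quarter": 2, "product_line": 6},
    "cy": {"channel": 7, "device": 2},
}
suggestions = recommend_filters("ana", usage)
print(suggestions)  # ['product_line']
```

Here "ana" resembles "ben" but shares nothing with "cy", so only the filter that "ben" uses is suggested.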

Embedding Predictive Analytics into Visual Interfaces

Embedding predictive analytics into visualizations empowers users to anticipate future trends and make proactive decisions. AI models such as time-series forecasting, regression analysis, or classification algorithms can be integrated directly into dashboards for seamless insights generation.

Procedures for embedding predictive analytics include:

- Select Appropriate Predictive Models: Choose models suited to the data type and prediction horizon, such as ARIMA for time-series forecasting or neural networks for complex pattern recognition.

- Integrate Models into Visualization Platforms: Embed predictive models built with frameworks such as TensorFlow or PyTorch, exposed through APIs or SDKs, or use specialized BI tools with built-in AI capabilities.

- Create Visual Elements for Predictions: Design charts, heatmaps, or gauges that display both current data and predicted outcomes, with indicators for confidence levels.

- Enable User Interaction with Predictions: Allow users to input scenario parameters or variables, enabling the system to generate customized forecasts dynamically.
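
To make the idea of showing predictions with a confidence indicator concrete, here is a minimal sketch that fits a straight-line trend by least squares and extrapolates it. It deliberately swaps in a linear trend for ARIMA or a neural network to stay dependency-free; the `linear_forecast` helper and the sales figures are assumptions, and the band is a rough envelope rather than a formal prediction interval.

```python
from statistics import mean, stdev

def linear_forecast(series, horizon, z=1.96):
    """Fit y = intercept + slope * t by least squares and extrapolate.

    Returns a list of (lower, predicted, upper) tuples. The band is
    z times the residual standard deviation, a rough approximation.
    """
    n = len(series)
    ts = list(range(n))
    t_bar, y_bar = mean(ts), mean(series)
    slope = (sum((t - t_bar) * (y - y_bar) for t, y in zip(ts, series))
             / sum((t - t_bar) ** 2 for t in ts))
    intercept = y_bar - slope * t_bar
    residuals = [y - (intercept + slope * t) for t, y in zip(ts, series)]
    band = z * stdev(residuals)
    forecast = []
    for t in range(n, n + horizon):
        predicted = intercept + slope * t
        forecast.append((predicted - band, predicted, predicted + band))
    return forecast

monthly_sales = [100, 103, 104, 107, 108]  # hypothetical history
forecast = linear_forecast(monthly_sales, horizon=3)
for lower, predicted, upper in forecast:
    print(f"{predicted:.1f} (range {lower:.1f} to {upper:.1f})")
```

In a chart, the middle value would be drawn as the forecast line and the lower and upper values as a shaded region around it.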

Improving Visual Clarity and Insightfulness Using AI

Enhancing the clarity and depth of data visualizations is essential for effective communication of insights. Artificial Intelligence offers powerful techniques to refine visual representations, ensuring that key data points stand out and that viewers can interpret complex information with ease. By leveraging AI-driven methods, data analysts and designers can create visualizations that are not only visually appealing but also rich in meaningful insights.

Utilizing AI to improve visual clarity involves sophisticated algorithms that assist in feature selection, noise reduction, and aesthetic optimization. These techniques help highlight significant trends and patterns while minimizing distractions caused by irrelevant or misleading data. This section explores how AI can be instrumental in making data visualizations more precise, understandable, and insightful, ultimately enabling better decision-making processes.

AI-Assisted Feature Selection to Highlight Significant Data Points

Feature selection plays a crucial role in emphasizing the most relevant data within a visualization. AI algorithms can automatically identify and select features that contribute the most to the underlying patterns or outcomes. This process reduces dimensionality and prevents information overload, making visualizations clearer and more focused.

Techniques such as recursive feature elimination, mutual information scores, and deep learning-based attention mechanisms help pinpoint critical variables or data points. For example, in a sales dashboard, AI might highlight regions or products with the highest growth, ensuring that viewers focus on the most impactful elements. Additionally, AI can adjust visual emphasis—such as size, color, or position—to make these significant points stand out distinctly from less relevant data.

“AI-driven feature selection ensures that only the most influential data points are visually emphasized, streamlining the viewer’s interpretation.”
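
Mutual information scoring, one of the techniques named above, can be sketched for discrete features as follows. The `mutual_information` helper and the toy sales data are illustrative assumptions; on small samples the estimate is noisy, so treat the ranking as a hint rather than a definitive result.

```python
from collections import Counter
from math import log2

def mutual_information(xs, ys):
    """Mutual information (in bits) between two equally long discrete sequences."""
    n = len(xs)
    px, py, pxy = Counter(xs), Counter(ys), Counter(zip(xs, ys))
    return sum(
        (c / n) * log2((c / n) / ((px[x] / n) * (py[y] / n)))
        for (x, y), c in pxy.items()
    )

# Hypothetical data: which attribute best explains high growth?
high_growth = [1, 1, 0, 0, 1, 0]
features = {
    "region": ["N", "N", "S", "S", "N", "S"],
    "weekday": ["Mon", "Tue", "Mon", "Tue", "Mon", "Tue"],
}
scores = {name: mutual_information(col, high_growth) for name, col in features.items()}
ranked = sorted(scores, key=scores.get, reverse=True)
print(ranked)  # ['region', 'weekday']
```

A dashboard could then give the top-ranked attribute the most prominent visual channel, such as color or position.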

Reducing Noise and Outliers Through AI Algorithms

Real-world data often contains noise and outliers that can obscure meaningful trends. AI algorithms excel at filtering these unwanted elements without sacrificing the integrity of the data. Techniques like robust principal component analysis (RPCA), anomaly detection, and machine learning-based filtering help smooth data and remove distortions.

For instance, when visualizing customer feedback, AI can identify and exclude outlier responses that differ significantly from typical patterns, such as spam or erroneous entries. This cleaning step yields visualizations that more accurately reflect the underlying data distribution. Moreover, AI can adaptively learn thresholds for outlier removal based on data context, so that important rare events are preserved when necessary.

“AI algorithms improve the accuracy of visual insights by effectively distinguishing genuine data trends from noise and anomalies.”
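
A simple statistical stand-in for the filtering described above is the modified z-score based on the median absolute deviation (MAD). It is not RPCA or a learned model, just a robust rule of thumb; the `mad_outliers` helper, the 3.5 threshold, and the sample ratings are assumptions for illustration.

```python
from statistics import median

def mad_outliers(values, threshold=3.5):
    """Flag values whose modified z-score (based on the MAD) exceeds threshold."""
    med = median(values)
    mad = median(abs(v - med) for v in values)
    if mad == 0:
        return [False] * len(values)  # no spread to measure against
    return [abs(0.6745 * (v - med) / mad) > threshold for v in values]

ratings = [4.1, 3.9, 4.0, 4.2, 3.8, 9.5, 4.0]  # hypothetical feedback scores
flags = mad_outliers(ratings)
cleaned = [v for v, is_outlier in zip(ratings, flags) if not is_outlier]
print(flags.index(True))  # 5
```

Returning flags instead of deleting values leaves the choice open: a chart can hide flagged points, or mark them distinctly when rare events matter.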

Enhancing Color Schemes and Layout Designs for Better Comprehension

Color schemes and layout arrangements significantly influence how easily viewers interpret data visualizations. AI-driven tools can optimize these visual elements by analyzing data patterns and human perceptual preferences. This ensures that the visual design enhances clarity rather than causing confusion or misinterpretation.

Advanced AI techniques employ perceptual modeling and reinforcement learning to select harmonious color palettes that differentiate categories clearly and appeal aesthetically. Similarly, layout algorithms can automatically organize visual elements—such as positioning labels, adjusting spacing, and balancing visual weight—to improve readability and reduce clutter. For example, in complex dashboards, AI can determine the optimal placement of interactive filters or annotations to guide user focus effectively.

“AI-enhanced color and layout optimization fosters intuitive comprehension, allowing viewers to grasp complex data relationships effortlessly.”
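
Before reaching for perceptual models or reinforcement learning, a rule-based baseline helps show what is being optimized. The sketch below spaces hues evenly for categorical colors and uses the WCAG contrast-ratio formula to pick a readable label color. The helper names and the default lightness and saturation values are assumptions.

```python
import colorsys

def categorical_palette(n, lightness=0.45, saturation=0.65):
    """Return n RGB tuples (0..1 floats) with evenly spaced hues."""
    return [colorsys.hls_to_rgb(i / n, lightness, saturation) for i in range(n)]

def relative_luminance(rgb):
    """WCAG relative luminance of an sRGB color with 0..1 channels."""
    def linearize(c):
        return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4
    r, g, b = (linearize(c) for c in rgb)
    return 0.2126 * r + 0.7152 * g + 0.0722 * b

def contrast_ratio(a, b):
    """WCAG contrast ratio between two colors, from 1 (same) to 21 (black on white)."""
    lighter, darker = sorted((relative_luminance(a), relative_luminance(b)), reverse=True)
    return (lighter + 0.05) / (darker + 0.05)

def to_hex(rgb):
    return "#" + "".join(f"{round(c * 255):02x}" for c in rgb)

WHITE, BLACK = (1.0, 1.0, 1.0), (0.0, 0.0, 0.0)
palette = categorical_palette(5)
hex_colors = [to_hex(c) for c in palette]
# For each swatch, choose whichever label color contrasts more strongly.
label_colors = [max((WHITE, BLACK), key=lambda t: contrast_ratio(t, c)) for c in palette]
```

An AI-driven optimizer would search over palettes using a similar readability score as part of its objective.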

AI Tools and Platforms for Data Visualization Enhancement

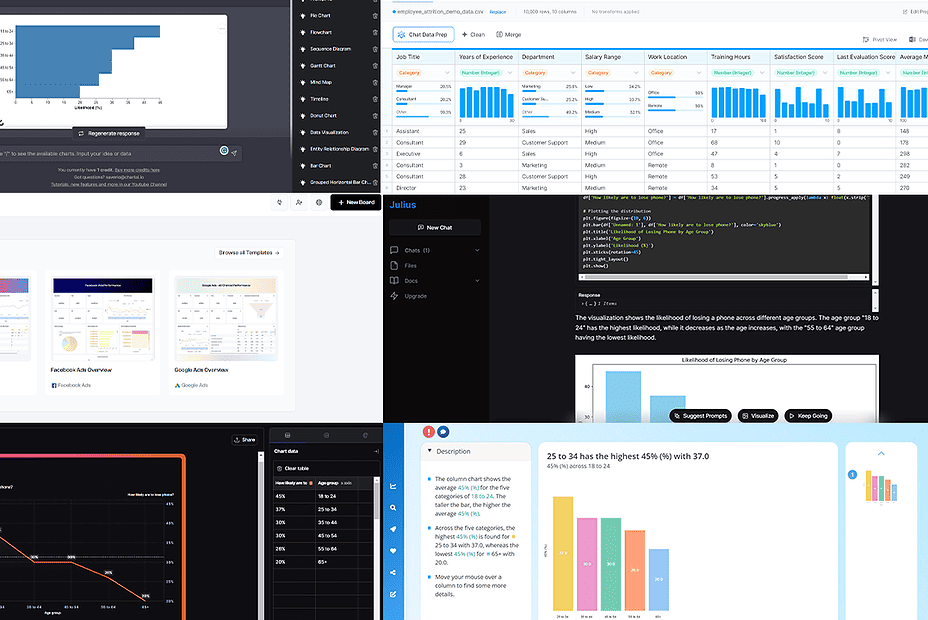

Advancements in artificial intelligence have led to a proliferation of specialized tools and platforms designed to augment data visualization processes. These AI-enabled solutions not only streamline the creation of insightful visualizations but also introduce capabilities such as automatic pattern recognition, predictive analytics, and interactive features that were previously labor-intensive. Understanding the landscape of these tools is essential for data professionals seeking to elevate their visualization strategies.

This section provides an overview of some of the most popular AI-powered data visualization platforms, their core features, and guidance on integrating AI APIs with existing visualization tools. Additionally, a comparative table highlights the capabilities, user-friendliness, and cost considerations of these platforms to facilitate informed decision-making.

Popular AI-Enabled Data Visualization Platforms and Their Key Features

Below is a selection of leading AI-driven visualization platforms, each distinguished by unique functionalities that cater to diverse analytical needs:

- Tableau with Einstein AI: Integrates Salesforce’s Einstein AI to automate insights, identify trends, and suggest optimal visualizations based on data patterns. It offers natural language querying and predictive capabilities within a familiar interface.

- Power BI with Azure AI: Leverages Azure Machine Learning for predictive analytics, anomaly detection, and text analytics. Its seamless integration enables users to embed AI insights directly into reports and dashboards.

- Qlik Sense with Insight Advisor: Features AI-driven insights through Insight Advisor, which guides users in exploring data, generating visualizations, and uncovering hidden relationships, fostering self-service analytics.

- IBM Cognos Analytics: Incorporates AI functionalities such as automated visual generation, smart data prep, and conversational analytics, simplifying complex data analysis and visualization tasks.

These platforms are distinguished by their ability to automate complex analytical tasks, enhance visualization quality, and enable non-expert users to derive meaningful insights with minimal technical knowledge.

Integrating AI APIs with Existing Visualization Tools

For organizations seeking to augment their current visualization solutions with AI capabilities, integrating AI APIs is a strategic approach. This allows leveraging specialized AI services, such as natural language processing, image recognition, or predictive modeling, within familiar visualization environments.

Common integration methods include:

- RESTful API Calls: Many AI services offer REST APIs that can be called from within visualization tools or custom dashboards. Developers can embed these calls to fetch AI-generated insights or perform real-time data analysis.

- SDKs and Libraries: Some platforms provide SDKs (Software Development Kits) in popular programming languages such as Python, R, or JavaScript, enabling more seamless integration and automation.

- Plugins and Connectors: Certain visualization tools support plugins or connectors that facilitate direct integration with AI services, reducing development overhead and simplifying deployment.

It is essential to consider data security, API latency, and compatibility when integrating AI services to ensure a smooth user experience and reliable insights.
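
The REST approach can be sketched with Python's standard library. The endpoint URL, the payload shape, and the bearer-token header below are hypothetical and do not describe any particular vendor's API; the explicit timeout reflects the latency concern noted above.

```python
import json
import urllib.request

API_URL = "https://ai.example.com/v1/insights"  # hypothetical endpoint

def build_insight_request(rows, api_key, url=API_URL):
    """Prepare a JSON POST request carrying the data rows to analyze."""
    payload = json.dumps({"rows": rows}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=payload,
        method="POST",
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",  # assumed auth scheme
        },
    )

def fetch_insights(request, timeout=5.0):
    """Send the request and parse the JSON reply; fails fast if the service is slow."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.load(response)

request = build_insight_request([{"month": "Jan", "sales": 120}], api_key="demo-key")
# insights = fetch_insights(request)  # requires a real endpoint and credentials
```

Separating request construction from sending keeps the payload easy to inspect and test without network access.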

Comparison of AI Data Visualization Platforms

To assist in evaluating these tools based on capabilities, ease of use, and cost, the following comparison table summarizes key aspects:

| Platform | Capabilities | Ease of Use | Cost |
|---|---|---|---|
| Tableau with Einstein AI | Automated insights, predictive analytics, natural language queries | High: intuitive interface familiar to many users | Subscription-based, enterprise pricing varies |
| Power BI with Azure AI | Predictive models, anomaly detection, NLP | Moderate: seamless integration with the Microsoft ecosystem | Pay-as-you-go or enterprise licensing |
| Qlik Sense with Insight Advisor | AI-driven exploration, automatic visualization suggestions | High: designed for self-service analytics | Subscription model, pricing depends on deployment scale |
| IBM Cognos Analytics | Automated visual generation, conversational interfaces, smart data prep | Moderate: requires some training for advanced features | Licensed software with flexible plans |

This comparison aims to clarify the strengths and considerations associated with each platform, enabling organizations to select solutions aligned with their technical expertise, budget constraints, and analytical objectives.

Best Practices for Implementing AI-Enhanced Data Visualizations

Integrating AI into data visualization workflows offers significant advantages in terms of efficiency, insightfulness, and user engagement. However, successful implementation requires adherence to established best practices that ensure accuracy, reliability, and transparency. Proper guidelines for selecting AI models, validating outputs, and maintaining interpretability are essential to harness the full potential of AI-driven visualizations while mitigating risks associated with bias or misinterpretation.

Following structured best practices facilitates the creation of trustworthy and effective visualizations that support data-driven decision-making. This section provides comprehensive guidelines to help practitioners optimize AI integration in their visualization projects, ensuring these tools serve as reliable and transparent aids for understanding complex datasets.

Selecting Appropriate AI Models for Specific Visualization Needs

Choosing the right AI model means matching it to the specific objectives and characteristics of a data visualization project. Different models excel at different tasks, including pattern recognition, clustering, anomaly detection, and predictive analytics. An informed selection process involves understanding the dataset’s nature, the type of insights required, and the computational resources available.

- Assess Data Characteristics: Evaluate data volume, dimensionality, and quality to determine whether models like deep learning, decision trees, or clustering algorithms are most suitable.

- Define Visualization Goals: Clarify whether the goal is to identify trends, segment data, or predict future outcomes, guiding the choice of models such as neural networks, k-means clustering, or regression models.

- Consider Model Explainability: For visualizations that support decision-making, prioritize models that offer interpretability, such as decision trees or linear regression, over more opaque models like deep neural networks.

- Evaluate Computational Constraints: Select models that balance complexity with processing capabilities to ensure timely and scalable visualization outputs.

Effective model selection combines an understanding of both data characteristics and visualization objectives, ensuring AI enhances rather than complicates the interpretability of visual insights.

Creating Checklists for Validating the Accuracy and Reliability of AI-Driven Visual Outputs

Validation is a critical step to guarantee that AI-enhanced visualizations accurately represent underlying data and do not introduce misleading artifacts. Systematic checklists help in consistently assessing the quality and trustworthiness of AI-driven outputs across different projects.

Key validation points include:

- Data Quality Verification: Confirm that the input data is accurate, complete, and free of biases that could skew AI outputs.

- Model Performance Evaluation: Use metrics such as accuracy, precision, recall, or F1-score relevant to the task to assess model effectiveness before visualization deployment.

- Cross-Validation: Implement techniques like k-fold cross-validation to test model stability across different data subsets.

- Visual Consistency Checks: Ensure that visualizations reflect true data patterns without distortions or anomalies introduced by the model.

- Expert Review: Incorporate domain experts to interpret the visual outputs, confirming that insights align with domain knowledge and expectations.

Regular validation checkpoints act as safeguards, ensuring AI-driven visualizations maintain integrity and provide dependable insights for decision-makers.
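
Two items from this checklist, performance metrics and k-fold splitting, can be sketched in plain Python. The helper names and the sample labels are illustrative assumptions; the fold generator does not shuffle, so data with a meaningful order should be shuffled beforehand.

```python
def precision_recall_f1(y_true, y_pred, positive=1):
    """Compute precision, recall, and F1 for one positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1

def k_fold_indices(n, k):
    """Yield (train, test) index lists; fold sizes differ by at most one."""
    sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    start = 0
    for size in sizes:
        test = list(range(start, start + size))
        train = [i for i in range(n) if i < start or i >= start + size]
        yield train, test
        start += size

y_true = [1, 0, 1, 1, 0, 0]  # hypothetical ground truth
y_pred = [1, 0, 0, 1, 1, 0]  # hypothetical model output
precision, recall, f1 = precision_recall_f1(y_true, y_pred)
folds = list(k_fold_indices(5, 2))
print(folds)  # [([3, 4], [0, 1, 2]), ([0, 1, 2], [3, 4])]
```

Recording these scores alongside each published visualization makes later audits of the checklist easier.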

Procedures for Maintaining Transparency and Interpretability in AI-Enhanced Visualizations

Transparency and interpretability are foundational for building trust in AI-powered visualizations, especially when used in critical decision-making contexts. Implementing systematic procedures ensures that end-users understand how AI models contribute to the visual insights and can critically assess their validity.

Key procedures include:

- Model Documentation: Maintain detailed records of the AI models used, including their training data, parameters, and decision criteria, to facilitate traceability.

- Feature Importance Analysis: Use techniques such as SHAP values or feature importance scores to illustrate which data attributes influence model outputs, enhancing interpretability.

- Simplification of Complex Models: Where possible, opt for simpler models or apply techniques like model pruning to make AI contributions more understandable.

- Visualization of Model Processes: Incorporate visuals that depict how data flows through models and leads to specific visual outcomes.

- User Education and Communication: Provide training, tooltips, or documentation that explain the role of AI in the visualization, ensuring users grasp how insights are generated.

Prioritizing transparency and interpretability fosters user confidence, encourages critical engagement, and promotes ethical AI practices within data visualization initiatives.
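
Feature importance scores can be approximated without any library through permutation importance: shuffle one feature at a time and measure how much accuracy drops. This is a model-agnostic alternative to SHAP, not a reimplementation of it; the `permutation_importance` helper, the threshold model, and the sample rows are hypothetical.

```python
import random

def permutation_importance(predict, rows, targets, n_features, seed=0):
    """Accuracy drop per feature when that feature's column is shuffled."""
    rng = random.Random(seed)

    def accuracy(data):
        return sum(predict(r) == t for r, t in zip(data, targets)) / len(targets)

    baseline = accuracy(rows)
    importances = []
    for j in range(n_features):
        column = [r[j] for r in rows]
        rng.shuffle(column)  # break the link between feature j and the target
        shuffled = [r[:j] + (v,) + r[j + 1:] for r, v in zip(rows, column)]
        importances.append(baseline - accuracy(shuffled))
    return importances

# Hypothetical model: predicts churn from monthly spend only, ignoring tenure.
def predict(row):
    spend, tenure = row
    return int(spend > 50)

rows = [(80, 3), (20, 5), (65, 1), (30, 8), (90, 2), (10, 7), (55, 4), (45, 6)]
targets = [predict(r) for r in rows]
importances = permutation_importance(predict, rows, targets, n_features=2)
```

Because the model never reads tenure, its importance is exactly zero, which a bar chart of `importances` would make visible to end users.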

Case Studies and Examples of AI-Enhanced Data Visualization

Real-world examples demonstrate the impactful integration of artificial intelligence in transforming data visualization practices. These case studies showcase how AI techniques can be leveraged to generate more insightful, interactive, and predictive visualizations across diverse industries. Analyzing these projects provides valuable insights into successful strategies, potential pitfalls, and lessons learned, guiding future implementations toward more effective data storytelling and decision-making.

Below is a summary of notable case studies illustrating the diverse applications of AI in data visualization projects. The table organizes key aspects such as the project scope, AI techniques employed, outcomes achieved, and challenges encountered, offering a comprehensive view of practical experiences in this domain.

Summary of AI-Enhanced Data Visualization Case Studies

| Project | AI Techniques Used | Outcomes | Challenges |
|---|---|---|---|
| Customer Churn Prediction Dashboard for Telecom | Machine Learning Models, Clustering, Natural Language Processing (NLP) | Enhanced predictive accuracy for customer attrition; interactive dashboards allowing segmentation analysis | Data quality issues, model interpretability, real-time data processing constraints |
| Financial Market Visualization Platform | Deep Learning, Time Series Forecasting, Anomaly Detection | Accurate trend forecasting; early detection of market anomalies; dynamic visualizations with predictive overlays | Complexity of models, high computational requirements, market data volatility |
| Healthcare Data Dashboard for Disease Spread | Geospatial AI, Clustering Algorithms, Predictive Modeling | Visualization of disease hotspots; prediction of future outbreaks; interactive maps for policymakers | Privacy concerns, data sparsity in certain regions, integrating heterogeneous data sources |
| Supply Chain Optimization Visualization | Reinforcement Learning, Predictive Analytics, Scenario Simulation | Optimized logistics routes; scenario-based visualizations allowing proactive planning | Complex model tuning, integration with existing systems, interpretability of AI recommendations |
| Retail Sales Analysis Dashboard | AI-driven Segmentation, Sales Forecasting, Customer Behavior Modeling | Personalized marketing insights; sales trend predictions; interactive visualizations for store managers | Data privacy issues, ensuring model fairness, maintaining user-friendly interfaces |

Lessons learned from these initiatives emphasize the importance of high-quality, clean data to maximize AI effectiveness, the need for transparency and interpretability of AI models to foster user trust, and the value of iterative testing and user feedback in refining visualizations. Common pitfalls include over-reliance on complex models without adequate explanation, neglecting data privacy considerations, and underestimating computational demands. Successful projects balance advanced AI techniques with usability, ethical standards, and clear communication, so that visualizations are not only data-rich but also support actionable insights for diverse stakeholders.

Conclusion

Incorporating AI into data visualization opens up new horizons for clarity, interactivity, and analytical depth. By applying these innovative techniques and tools, organizations can significantly improve their data storytelling capabilities, leading to more impactful insights and smarter decision-making processes. Embrace these advancements to stay ahead in the data-driven landscape.