Learning how to automate data visualization with AI opens new horizons for streamlining complex data analysis and presentation. This approach leverages advanced technologies to transform raw data into meaningful visual insights with minimal manual intervention, enhancing both efficiency and accuracy.

By integrating AI-driven automation into visualization workflows, organizations can generate dynamic, interactive, and real-time dashboards that facilitate better decision-making. Exploring the key tools, techniques, and challenges involved provides a comprehensive understanding of this innovative field.

Overview of automating data visualization with AI

Automating data visualization with artificial intelligence (AI) represents a transformative approach to interpreting vast and complex datasets efficiently. By leveraging AI-driven tools and algorithms, organizations can generate insightful visual representations of data with minimal manual intervention, enabling faster decision-making and more dynamic reporting processes.

This integration of AI into data visualization workflows simplifies the process of identifying patterns, anomalies, and trends within data, making it accessible even to those without extensive technical expertise. The automation also facilitates continuous updates and real-time visualizations, enhancing responsiveness to changing data environments.

Benefits of integrating AI into data visualization workflows

Incorporating AI into data visualization offers numerous advantages that significantly enhance analytical capabilities and operational efficiency. AI-powered automation reduces the time and effort required to prepare and visualize data, allowing analysts to focus on deriving insights rather than managing technical procedures.

Furthermore, AI can handle complex and large-scale datasets, uncover hidden correlations, and generate adaptive visualizations tailored to specific user needs. This adaptability ensures that visual outputs are relevant and easily interpretable, fostering better communication across teams and stakeholders.

Another key benefit is the ability to automate repetitive tasks such as data cleaning, transformation, and updating visualizations continuously, which minimizes human error and ensures data consistency. Ultimately, AI-driven visualization promotes more data-informed decision-making in a timely manner.

Comparison of traditional vs. AI-automated visualization methods

Understanding the differences between traditional and AI-automated data visualization approaches highlights the efficiency gains and capabilities introduced through AI integration. The table below summarizes key aspects of both methods:

| Aspect | Traditional Data Visualization | AI-Automated Data Visualization |
|---|---|---|
| Data Preparation | Manual data cleaning and transformation by analysts | Automated data cleaning, transformation, and integration using AI algorithms |
| Visualization Generation | Manual creation based on analyst expertise and static tools | Dynamic generation with AI selecting optimal visualization types based on data patterns |
| Update Frequency | Periodic, often requiring manual refresh | Real-time or scheduled automatic updates |
| Complex Data Handling | Limited; manual intervention needed for complex datasets | High; AI algorithms can analyze large, multidimensional datasets efficiently |
| User Involvement | High; significant manual effort and expertise required | Lower; AI automates most processes, allowing users to focus on insights |

In summary, while traditional visualization methods rely heavily on manual input and static processes, AI-driven automation introduces agility, scalability, and intelligence to the data visualization process. This evolution empowers organizations to leverage data more effectively and respond swiftly to emerging insights and trends.

Key AI Technologies and Tools for Data Visualization Automation

Automating data visualization through AI involves leveraging a suite of advanced algorithms and specialized platforms designed to transform raw data into insightful visual representations efficiently. These technologies enable analysts and organizations to generate complex visualizations with minimal manual effort, enhancing decision-making processes and operational agility.

By integrating AI-driven tools into data workflows, users can automate repetitive tasks, uncover hidden patterns, and produce dynamic visualizations that adapt to changing datasets. Understanding the core AI algorithms and the leading platforms that facilitate these capabilities is essential for organizations aiming to harness the full potential of automated data visualization.

Prominent AI Algorithms Used to Generate Visualizations Automatically

Several AI algorithms underpin the automation of data visualization, each tailored to specific types of data and analytical goals. These algorithms analyze data, identify salient features, and generate visual representations that best convey the underlying insights.

- Clustering Algorithms (e.g., K-Means, DBSCAN): These algorithms group similar data points, which can then be visualized to reveal inherent data segments or clusters within a dataset. For example, customer segmentation in marketing relies heavily on clustering to identify distinct consumer groups.

- Dimensionality Reduction Techniques (e.g., PCA, t-SNE, UMAP): These methods reduce high-dimensional data into two or three dimensions, facilitating effective visualization. They are commonly used in genomics, image recognition, and text analysis to visualize complex data structures.

- Generative Models (e.g., GANs, Variational Autoencoders): Generative models learn a dataset's distribution and can produce synthetic samples. Plotting those samples alongside the real data helps illustrate plausible data distributions or augment limited datasets. They are increasingly used in creative data storytelling and simulation scenarios.

- Neural Networks: Deep learning models, especially convolutional neural networks (CNNs) and recurrent neural networks (RNNs), produce outputs that lend themselves to visualization, such as saliency heatmaps, attention maps, and predictive trend lines derived from complex datasets.
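
To make the clustering case concrete, here is a minimal, standard-library sketch of K-Means on 2-D points. It is an illustration under stated assumptions rather than a production implementation: the function names `dist2` and `kmeans` are hypothetical, and the deterministic farthest-point initialisation is a simplification chosen for reproducibility. In practice a library implementation such as scikit-learn's would normally be used.

```python
def dist2(p, q):
    """Squared Euclidean distance between two 2-D points."""
    return (p[0] - q[0]) ** 2 + (p[1] - q[1]) ** 2


def kmeans(points, k, iterations=20):
    """Minimal k-means for 2-D points; returns (centroids, labels)."""
    # Deterministic farthest-point initialisation (an assumption of this sketch).
    centroids = [points[0]]
    while len(centroids) < k:
        centroids.append(max(points, key=lambda p: min(dist2(p, c) for c in centroids)))

    labels = []
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid.
        labels = [min(range(k), key=lambda c: dist2(p, centroids[c])) for p in points]
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                centroids[c] = (
                    sum(m[0] for m in members) / len(members),
                    sum(m[1] for m in members) / len(members),
                )
    return centroids, labels
```

The returned `labels` list can be passed as the colour argument of a scatter plot (for example `ax.scatter(xs, ys, c=labels)` in Matplotlib), so each segment appears in its own colour.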

Popular Platforms and Software Facilitating AI-Based Visualization

To streamline the integration of AI into data visualization workflows, several platforms and software solutions offer powerful, user-friendly interfaces and automation capabilities. These tools vary in complexity and specialization but share the common goal of simplifying data analysis through AI.

- Tableau with Einstein Analytics (since renamed CRM Analytics): Combines Tableau’s visualization capabilities with Salesforce’s Einstein AI, enabling predictive analytics and automated visualization generation based on user data.

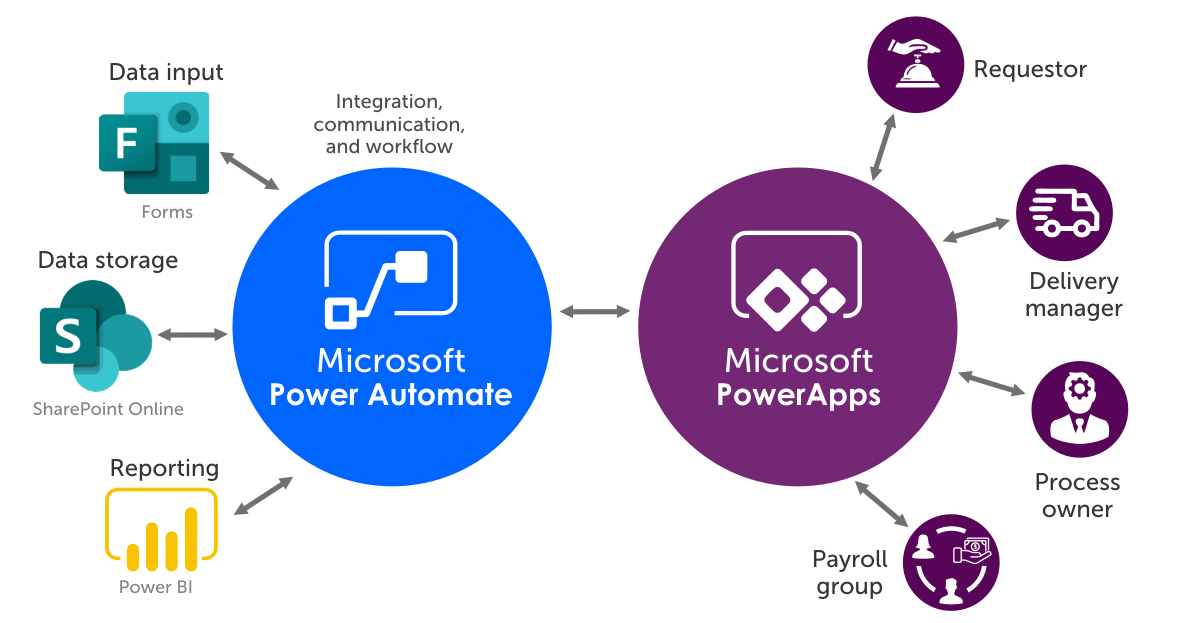

- Microsoft Power BI with Azure Machine Learning: Integrates Power BI’s visualization tools with Azure’s AI services, allowing users to embed predictive models directly into dashboards for real-time insights.

- Google Data Studio (now Looker Studio) with Vertex AI: Leverages Google’s cloud-based AI platform to automate data processing and generate interactive visualizations, especially suited for large-scale data environments.

- Qlik Sense with Qlik Cognitive Engine: Incorporates AI-driven insights and automatic visualization suggestions, improving the efficiency of data exploration and reporting.

- IBM Cognos Analytics: Features AI-assisted data preparation, pattern recognition, and visualization recommendations, facilitating end-to-end automation in enterprise data environments.

Integration of AI Tools into Existing Data Analysis Pipelines

Effective integration of AI tools within data analysis workflows involves several stages, ensuring seamless data flow and insight generation. Visualizing this integration as a flowchart underscores the systematic approach needed for successful automation.

Data Collection & Preprocessing → AI Model Selection & Training → Visualization Generation → Insights & Reporting

The initial phase involves gathering and cleaning raw data, which is then fed into AI models such as clustering or dimensionality reduction algorithms. The trained AI models automatically generate visualizations, which are refined and incorporated into dashboards or reports. This integrated pipeline enhances real-time decision-making by providing continuously updated visual insights, reducing manual intervention, and enabling scalable analytics across diverse data sources.

Data preparation and preprocessing for AI-enabled visualization

Effective data visualization powered by AI hinges on the quality and readiness of the underlying datasets. Preparing and preprocessing data ensures that algorithms can accurately interpret and generate meaningful visual insights. This stage is crucial for transforming raw, often messy data into a structured format suitable for sophisticated AI-driven visualization techniques.

Proper data preparation involves cleaning, formatting, selecting relevant features, and augmenting datasets to improve the accuracy and richness of visual outputs. Implementing systematic procedures for these tasks enhances the overall effectiveness of AI-enabled visualization systems and facilitates clearer, more insightful data storytelling.

Cleaning and formatting data suitable for AI algorithms

Cleaning and formatting data is foundational in ensuring that AI algorithms can process information efficiently and accurately. Raw data often contains inconsistencies, missing values, duplicates, and irrelevant entries that can distort visualization outcomes if not addressed properly. Proper cleaning and formatting mitigate these issues, leading to more reliable and insightful visualizations.

- Identify and handle missing data through imputation techniques such as mean, median, or mode substitution, or by using advanced methods like k-Nearest Neighbors (k-NN) or regression-based imputation.

- Remove duplicates and inconsistent entries to prevent skewed analysis, ensuring each data point is unique and accurate.

- Normalize or standardize data to bring all features onto a comparable scale, especially important for algorithms sensitive to magnitude differences, such as neural networks or clustering models.

- Convert categorical variables into numerical format using encoding techniques like one-hot encoding, label encoding, or target encoding, enabling algorithms to interpret these features effectively.

- Ensure proper formatting of date and time fields, which may require parsing, standardization, and timezone adjustments to facilitate temporal analysis and visualizations.

Effective cleaning and formatting create a robust foundation for subsequent feature selection and augmentation, directly impacting the precision of AI-driven visual insights.
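
The cleaning steps listed above can be sketched with the standard library alone. This is a minimal illustration, assuming rows arrive as a list of dictionaries with one numeric and one categorical field; the function name `clean_rows` and the generated column names are hypothetical. A real workflow would more likely use pandas or scikit-learn preprocessing utilities.

```python
from statistics import median


def clean_rows(rows, numeric_field, category_field):
    """Deduplicate, impute, scale, and one-hot encode a list of dict rows."""
    # 1. Drop exact duplicates while keeping the first occurrence.
    seen, unique = set(), []
    for row in rows:
        key = tuple(sorted(row.items()))
        if key not in seen:
            seen.add(key)
            unique.append(dict(row))

    # 2. Impute missing numeric values (None) with the median of observed ones.
    observed = [r[numeric_field] for r in unique if r[numeric_field] is not None]
    fill = median(observed)
    for r in unique:
        if r[numeric_field] is None:
            r[numeric_field] = fill

    # 3. Min-max scale the numeric field into the range [0, 1].
    lo = min(r[numeric_field] for r in unique)
    hi = max(r[numeric_field] for r in unique)
    span = (hi - lo) or 1  # avoid division by zero when all values are equal
    for r in unique:
        r[numeric_field + "_scaled"] = (r[numeric_field] - lo) / span

    # 4. One-hot encode the categorical field.
    categories = sorted({r[category_field] for r in unique})
    for r in unique:
        for cat in categories:
            r[f"{category_field}={cat}"] = int(r[category_field] == cat)
    return unique
```

Median imputation and min-max scaling are only two of the options named above; which one suits a dataset depends on its distribution and on the downstream model.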

Techniques for feature selection and data augmentation to enhance visualization accuracy

Feature selection and data augmentation are strategic processes that significantly improve the performance and interpretability of AI models used in data visualization. Selecting the most relevant features reduces noise and computational complexity, while augmentation enriches data diversity, aiding in more comprehensive visual analysis.

Feature selection involves identifying variables that contribute most to the target outcome or visualization objective. Techniques such as correlation analysis, mutual information, Recursive Feature Elimination (RFE), and model-based importance measures help streamline datasets for better visualization clarity.

Data augmentation artificially increases the dataset’s size and variability, which is particularly valuable when data is limited. Methods include adding noise, synthesizing new minority-class samples with SMOTE (Synthetic Minority Over-sampling Technique) when classes are imbalanced, or applying transformations such as rotation, scaling, and flipping to image data to improve model robustness.

Both processes support the development of more precise, relevant, and visually compelling insights by enabling AI algorithms to learn from enriched and well-curated data, ultimately fostering more accurate and insightful visualizations.

Optimized feature selection coupled with strategic data augmentation enhances the interpretability and depth of AI-generated visual insights, making complex data more accessible and actionable.
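
As a small illustration of correlation-based feature selection, the sketch below keeps only the features whose absolute Pearson correlation with a target meets a threshold. The names `pearson` and `select_features` and the default threshold of 0.5 are assumptions made for this example. Correlation captures only linear relationships, so mutual information or model-based importance may be preferable for other data.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    # A constant column has zero spread; treat its correlation as 0.
    return cov / (sx * sy) if sx and sy else 0.0


def select_features(columns, target, threshold=0.5):
    """Keep features whose |correlation| with the target meets the threshold."""
    scores = {name: pearson(values, target) for name, values in columns.items()}
    kept = [name for name, r in scores.items() if abs(r) >= threshold]
    return kept, scores
```

Returning the scores alongside the kept names makes it easy to plot a ranked bar chart of feature relevance before committing to a cut-off.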

Step-by-step checklist for preparing datasets for AI-driven visualization

Adopting a systematic approach to data preparation ensures consistency and effectiveness in AI-enabled visualization workflows. The following checklist guides the essential steps to prepare datasets optimally:

- Acquire raw data from reliable sources, ensuring data relevance and accuracy.

- Conduct initial exploratory data analysis (EDA) to understand data structure, distributions, and anomalies.

- Clean data by addressing missing values, removing duplicates, and correcting inconsistencies.

- Format data appropriately, standardizing date, time, and categorical variables for compatibility with AI models.

- Normalize or standardize numerical features to ensure uniform scale.

- Encode categorical variables using suitable encoding techniques.

- Perform feature selection by analyzing feature importance and removing redundant or irrelevant variables.

- Augment data where necessary, applying techniques such as synthetic data generation or transformations to improve model training.

- Partition data into training, validation, and testing sets, maintaining balanced distributions for model evaluation.

- Document preprocessing steps thoroughly to ensure reproducibility and facilitate future updates or adjustments.

Following this comprehensive checklist equips data practitioners with a structured approach to prepare high-quality datasets, ultimately enhancing the accuracy and clarity of AI-powered visualizations.
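
The partitioning step in the checklist can be sketched as a reproducible shuffle-and-slice. This is a simplified example: the function name `split_dataset`, the 70/15/15 proportions, and the fixed seed are assumptions, and it performs a plain random split rather than the stratified split needed to keep class distributions balanced.

```python
import random


def split_dataset(rows, train=0.7, val=0.15, seed=42):
    """Shuffle rows reproducibly and split into train/validation/test lists."""
    shuffled = list(rows)  # copy so the caller's data is left untouched
    random.Random(seed).shuffle(shuffled)
    n_train = round(len(shuffled) * train)
    n_val = round(len(shuffled) * val)
    return (
        shuffled[:n_train],
        shuffled[n_train:n_train + n_val],
        shuffled[n_train + n_val:],  # whatever remains becomes the test set
    )
```

Recording the seed together with the other preprocessing parameters supports the reproducibility item at the end of the checklist.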

Automating Visualization Generation with AI

Automating the creation of data visualizations through AI significantly enhances efficiency and enables dynamic, real-time insights. By leveraging advanced algorithms and automation tools, organizations can generate impactful visual representations directly from raw data, reducing manual effort and minimizing errors. This approach not only accelerates the reporting process but also ensures consistency and scalability across multiple datasets and use cases.

Implementing AI-driven visualization generation involves the development of scripts and models that interpret raw data, determine the most suitable visualization types, and produce customized visual outputs aligned with specific presentation needs. This process often integrates machine learning techniques to optimize visualization selection, ensuring that insights are conveyed clearly and effectively to stakeholders. The following sections detail how to accomplish these tasks effectively.

Creating Scripts for Visualization from Raw Data

Developing scripts to automate visualization begins with scripting languages such as Python or R, which offer extensive libraries for data manipulation and visualization. These scripts typically ingest raw datasets, perform necessary data transformations, and then generate visual outputs with minimal manual intervention.

Key steps in scripting include:

- Data Extraction: Import raw data from various sources such as databases, CSV files, or APIs using libraries like Pandas (Python) or data.table (R).

- Data Cleaning and Preprocessing: Handle missing values, normalize data, and perform feature engineering to prepare the dataset for visualization.

- Visualization Logic: Define visualization parameters, such as chart types, axes, labels, and color schemes, with libraries like Matplotlib, Seaborn, Plotly, or ggplot2.

- Automation: Encapsulate the process within functions or scripts that can process multiple datasets or update visualizations dynamically.

For example, a Python script can automatically generate a bar chart of sales data by reading a CSV file, aggregating sales figures, and plotting the results with appropriate labels and colors, all in a single run.
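
A minimal version of such a script might look like the sketch below. The column names `region` and `amount`, the inline sample data, and the output filename are assumptions made for illustration. Aggregation uses only the standard library, while `plot_sales` imports Matplotlib lazily so the aggregation step can be run and tested without it.

```python
import csv
import io
from collections import defaultdict


def aggregate_sales(csv_file):
    """Sum the 'amount' column per 'region' from an open CSV file object."""
    totals = defaultdict(float)
    for row in csv.DictReader(csv_file):
        totals[row["region"]] += float(row["amount"])
    return dict(totals)


def plot_sales(totals, out_path="sales_by_region.png"):
    """Render the aggregated totals as a labelled bar chart (needs matplotlib)."""
    import matplotlib
    matplotlib.use("Agg")  # headless backend so the script can run unattended
    import matplotlib.pyplot as plt

    fig, ax = plt.subplots()
    ax.bar(list(totals), list(totals.values()), color="steelblue")
    ax.set_xlabel("Region")
    ax.set_ylabel("Total sales")
    ax.set_title("Sales by region")
    fig.savefig(out_path)
    plt.close(fig)


# Inline sample standing in for a real CSV file.
SAMPLE = "region,amount\nnorth,120.5\nsouth,80\nnorth,30\n"
totals = aggregate_sales(io.StringIO(SAMPLE))
```

Calling `plot_sales(totals)` writes the chart to disk. Pointing `aggregate_sales` at `open("sales.csv", newline="")` instead of the inline sample would process a real file.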

Configuring Machine Learning Models to Select Optimal Visualization Types

Machine learning models can be trained to analyze dataset characteristics and recommend the most suitable visualization formats. This automation ensures that data is presented in an intuitive and insightful manner, tailored to the specific structure and nature of each dataset.

Important considerations include:

- Feature Extraction: Extract features such as data distribution, variance, correlation coefficients, and data types to inform visualization choices.

- Model Selection: Employ classification algorithms—like decision trees or random forests—that predict the best visualization type based on these features.

- Training Data: Use labeled datasets where the optimal visualization for various data profiles has been pre-identified to train the models effectively.

- Model Deployment: Integrate the trained models into visualization pipelines, allowing automated recommendations during data analysis workflows.

For instance, given two strongly correlated numeric columns, a model might suggest a scatter plot to show the relationship, while for a single categorical column with only a few categories it might recommend a pie or bar chart.
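
The sketch below shows the shape of such a recommender using hand-written rules in place of a trained classifier. This is a deliberate simplification: the rules, the five-category cut-off, and the names `profile_column` and `recommend_chart` are all assumptions made for illustration. In a learned system, the profile dictionary would serve as the feature vector passed to a decision tree or random forest.

```python
def profile_column(values):
    """Summarise one column: inferred kind and number of distinct values."""
    numeric = all(
        isinstance(v, (int, float)) and not isinstance(v, bool) for v in values
    )
    return {
        "kind": "numeric" if numeric else "categorical",
        "distinct": len(set(values)),
    }


def recommend_chart(x_values, y_values=None):
    """Return a chart type from simple hand-written rules over column profiles."""
    x = profile_column(x_values)
    if y_values is None:
        # Single column: distribution of a numeric or categorical variable.
        if x["kind"] == "numeric":
            return "histogram"
        return "pie" if x["distinct"] <= 5 else "bar"
    y = profile_column(y_values)
    if x["kind"] == "numeric" and y["kind"] == "numeric":
        return "scatter"
    if x["kind"] == "categorical" and y["kind"] == "numeric":
        return "bar"
    return "heatmap"  # fallback for the remaining column pairings
```

Starting from explicit rules also gives a baseline for judging whether a trained model's recommendations are actually better.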

Customizing AI Outputs to Specific Presentation Needs

While AI algorithms can automate visualization processes, customization remains crucial to meet specific audience and presentation requirements. Fine-tuning AI-generated outputs ensures clarity, branding consistency, and relevance to the context.

Methods for customization include:

- Template Selection: Use predefined templates that align with organizational branding and presentation standards, enabling the AI to select or modify visualizations accordingly.

- Parameter Tuning: Adjust visualization parameters such as color palettes, font sizes, and labels to enhance readability and aesthetic appeal.

- Interactive Features: Incorporate interactive elements like tooltips, filters, and zoom functions to facilitate deeper data exploration within AI-generated visualizations.

- Feedback Loops: Implement mechanisms for users to provide feedback on AI outputs, allowing the system to learn and improve customization over time.

For example, an AI system might generate a timeline visualization for financial data, which can then be customized with the company’s color scheme, adjusted for specific timeframes, and enhanced with annotations to highlight key events, ensuring the final output aligns with presentation goals.
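
One way to structure this kind of customization is to treat the AI output as a plain chart specification and layer a house template and user overrides on top of it. The sketch below assumes a dictionary-based spec. `BRAND_TEMPLATE`, its keys, and the helper names are hypothetical and do not correspond to any particular library's schema.

```python
from copy import deepcopy

# Hypothetical house style; the keys are illustrative, not a library schema.
BRAND_TEMPLATE = {
    "colors": ["#003f5c", "#ffa600"],
    "font": {"family": "Helvetica", "size": 12},
    "annotations": [],
}


def apply_template(chart_spec, template=BRAND_TEMPLATE, **overrides):
    """Merge template defaults into a chart spec, then apply per-chart overrides."""
    styled = deepcopy(template)
    styled.update(deepcopy(chart_spec))  # AI-generated fields win over defaults
    styled.update(overrides)             # explicit user choices win over both
    return styled


def annotate(chart_spec, x, text):
    """Return a copy of the spec with one more annotation attached."""
    styled = deepcopy(chart_spec)
    styled.setdefault("annotations", []).append({"x": x, "text": text})
    return styled
```

The merge is shallow, so a nested value such as `font` supplied by the chart spec replaces the template's whole `font` entry. Copying on every step keeps the shared template from being modified by individual charts.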

Techniques for AI-powered Dynamic and Interactive Visualizations

Implementing real-time, interactive data visualizations powered by AI significantly enhances user engagement and decision-making capabilities. These techniques enable dashboards and visual interfaces to adapt dynamically to incoming data streams and provide users with immersive, customizable experiences. By leveraging advanced AI algorithms and interactive frameworks, organizations can transform static reports into lively, insightful tools that respond instantaneously to user inputs and evolving data landscapes.

Developing such visualizations involves integrating AI-driven data processing, real-time data updating mechanisms, and interactivity features that allow users to explore data intuitively. These approaches facilitate more profound insights, foster user engagement, and support timely decision-making in fast-paced environments such as financial trading, network monitoring, or customer analytics.

Developing Real-time Updating Dashboards Using AI

Creating dashboards that update in real-time with AI integration requires combining streaming data ingestion, AI-based anomaly detection, and adaptive visualization components. AI models can analyze incoming data streams to identify patterns, forecast trends, or flag anomalies instantly, thereby ensuring the visualization remains relevant and accurate. Technologies such as Apache Kafka or MQTT enable seamless data streaming, while AI frameworks like TensorFlow or PyTorch can process and interpret this data for visualization updates.

For example, in financial markets, AI-powered trading dashboards can continuously ingest live market data, perform sentiment analysis on news feeds, and display updated stock performance indicators with minimal latency. The system dynamically highlights significant changes or anomalies, empowering traders with timely insights that inform swift decisions.
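
The anomaly-flagging part of such a dashboard can be illustrated with a rolling z-score, a simple statistical stand-in for the learned models mentioned above. The class name, the window size, the threshold of three standard deviations, and the five-point warm-up are assumptions of this sketch. The streaming source (Kafka, MQTT, or otherwise) is left out, so values are simply fed in one at a time.

```python
from collections import deque
from statistics import mean, pstdev


class RollingAnomalyDetector:
    """Flag values that sit far from the mean of a sliding window."""

    def __init__(self, window=20, threshold=3.0):
        self.values = deque(maxlen=window)  # oldest values drop out automatically
        self.threshold = threshold

    def update(self, value):
        """Return True if `value` is anomalous relative to the current window."""
        is_anomaly = False
        if len(self.values) >= 5:  # wait for a few points before judging
            mu, sigma = mean(self.values), pstdev(self.values)
            is_anomaly = sigma > 0 and abs(value - mu) / sigma > self.threshold
        self.values.append(value)
        return is_anomaly
```

A dashboard callback could call `update` for each incoming tick and highlight the point when it returns `True`. Note that flagged values still enter the window, so a large spike temporarily widens the band used to judge the following points.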

Adding Interactivity Through AI-enhanced Features

Interactivity is elevated through AI-enhanced features that predict user intentions, personalize visualizations, and enable intuitive data exploration. Techniques such as natural language processing (NLP) allow users to query data using conversational language, which AI interprets to dynamically adjust visualizations. Additionally, machine learning models can recommend relevant filters, views, or insights based on user behavior, thus creating a more personalized experience.

For example, in a sales dashboard, AI can suggest which regions or products require attention based on historical interactions, automatically highlighting key data points. Interactive elements like AI-powered sliders or gesture controls can also allow users to drill down into specific data subsets, fostering a deeper understanding of complex datasets.

Embedding AI Insights into Visualizations for Deeper User Engagement

Embedding AI insights directly into visualizations involves integrating predictive analytics, sentiment analysis, or other model outputs alongside traditional charts and graphs. This approach provides users with contextual insights that deepen comprehension and support strategic actions. Visual cues such as annotations, color coding, or embedded text can highlight AI-derived insights, making complex data patterns more accessible.

“AI insights embedded within visualizations transform passive data viewing into an active, analytical experience, empowering users to uncover hidden trends and make informed decisions.”

For instance, a customer service analytics dashboard might display sentiment trends with AI-generated explanations or forecasted customer satisfaction scores. These insights guide users in identifying critical issues or opportunities without requiring extensive data analysis skills, thereby democratizing data-driven decision-making.
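
As a minimal illustration of turning a model output into an embedded annotation, the sketch below fits a least-squares line to evenly spaced observations and produces a short caption with a one-step forecast. The linear model is a stand-in for whatever predictive model a real system would use, and the function names and caption wording are assumptions.

```python
def linear_trend(ys):
    """Least-squares slope and intercept for evenly spaced observations."""
    n = len(ys)  # needs at least two observations
    xs = range(n)
    mx, my = (n - 1) / 2, sum(ys) / n
    slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum(
        (x - mx) ** 2 for x in xs
    )
    return slope, my - slope * mx


def trend_annotation(label, ys, horizon=1):
    """Build a short caption describing the trend and a naive forecast."""
    slope, intercept = linear_trend(ys)
    forecast = intercept + slope * (len(ys) - 1 + horizon)
    direction = "rising" if slope > 0 else "falling" if slope < 0 else "flat"
    return {
        "text": f"{label} is {direction}; projected next value: {forecast:.1f}",
        "forecast": forecast,
        "slope": slope,
    }
```

The returned `text` can be placed on the chart as an annotation, while `forecast` can be drawn as an extra point or a dashed extension of the series.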

Challenges and limitations of AI-driven automation in data visualization

While AI-powered automation has significantly enhanced the efficiency and sophistication of data visualization processes, it is essential to recognize the inherent challenges and limitations that come with relying on artificial intelligence for such tasks. Understanding these issues is crucial for developing effective strategies to mitigate potential pitfalls and ensure that visualizations remain accurate, reliable, and meaningful.

Implementing AI in data visualization introduces complexities related to data quality, algorithmic biases, interpretability, and control over the visualization outcomes. These challenges can impact the overall trustworthiness of automated visualizations and influence decision-making processes dependent on them. Addressing these issues involves a comprehensive understanding of the limitations and proactive strategies to overcome them.

Common issues encountered in AI-driven data visualization automation

Automating data visualization with AI often involves several technical and conceptual difficulties. Key issues include:

- Data quality and consistency: AI models rely heavily on the quality and completeness of the input data. Noisy, incomplete, or outdated data can lead to misleading visualizations, reducing their usefulness.

- Biases in AI algorithms: Pre-existing biases in training data can cause AI models to generate skewed or biased visual outputs, which may reinforce inaccurate assumptions or stereotypes.

- Interpretability and transparency: Complex AI models, such as deep learning algorithms, often operate as “black boxes,” making it difficult to understand how specific visualizations are generated or to verify their correctness.

- Limited contextual understanding: While AI excels at pattern detection, it may lack the contextual awareness that human analysts bring, potentially leading to misrepresentations or oversights in visualizations.

- Scalability and computational resources: Large-scale data and sophisticated models demand significant computational power, which can limit real-time automation or increase costs.

Strategies to mitigate inaccuracies and biases in AI-generated visuals

To ensure the reliability of AI-driven visualizations, organizations should adopt comprehensive mitigation strategies:

- Data validation and cleaning: Implement rigorous data preprocessing workflows to identify and correct errors, inconsistencies, and outliers before feeding data into AI models.

- Bias detection and correction: Regularly audit training datasets for potential biases and incorporate fairness-aware algorithms. Augment datasets with diverse, representative data to reduce skewed outputs.

- Model transparency and explainability: Utilize interpretable AI techniques and tools that provide insight into how visualizations are generated, helping analysts verify and trust the outputs.

- Human-in-the-loop processes: Combine AI automation with expert oversight, allowing human analysts to review, adjust, or veto AI-generated visualizations when necessary.

- Continuous monitoring and feedback: Establish feedback mechanisms where users can flag inaccuracies or biases, enabling iterative improvements of the AI models and visualization outputs.

Comparison of manual and AI-automated approaches in terms of reliability and control

Evaluating manual versus AI-driven data visualization approaches involves balancing reliability, control, and efficiency. Each method offers distinct advantages and limitations:

| Aspect | Manual Visualization | AI-Automated Visualization |
|---|---|---|
| Reliability | High, as visualizations are crafted with human judgment, allowing nuanced interpretation and quality control. | Variable; depends on data quality, algorithm robustness, and implementation. AI can produce accurate visuals but is susceptible to errors if not properly managed. |
| Control | Complete, with analysts able to tailor and adjust visualizations based on context and expertise. | Limited; automation can generate visualizations rapidly but may lack flexibility or context-specific adjustments without explicit programming or oversight. |
| Scalability | Limited; manual processes are time-consuming and less feasible for large or dynamic datasets. | High; AI systems can handle vast datasets efficiently and produce visualizations in real-time, supporting large-scale operations. |
| Consistency | Dependent on analyst expertise; consistency may vary. | Potentially high, provided models are well-trained and standardized, ensuring uniformity across visual outputs. |

While manual visualizations offer greater control and interpretability, they are less scalable and more resource-intensive. AI-driven approaches excel in handling large volumes of data quickly and consistently but require careful management to ensure accuracy and trustworthiness. An optimal strategy often involves a hybrid approach, leveraging AI automation for efficiency while maintaining human oversight for quality assurance and nuanced interpretation.

Case Studies and Practical Implementations

Examining real-world examples of organizations deploying AI-driven data visualization reveals valuable insights into effective strategies, tool selection, and achieved outcomes. These case studies demonstrate how diverse industries leverage automation to enhance clarity, efficiency, and decision-making processes, providing a roadmap for similar initiatives.

Through detailed analysis of these implementations, it becomes evident that successful automation hinges on choosing appropriate AI techniques, integrating robust data pipelines, and aligning visualization objectives with organizational goals. The following case studies illustrate a range of approaches and lessons learned from practical deployments across different sectors.

Financial Services Sector: Risk Assessment and Portfolio Visualization

In the financial industry, a leading investment bank integrated AI-powered visualization tools to monitor risk exposure and portfolio performance in real-time. The organization employed machine learning models such as clustering algorithms to categorize assets and neural networks for predictive analytics.

Steps Taken:

- Data aggregation from multiple sources including market feeds, transaction records, and macroeconomic indicators.

- Data preprocessing involving normalization and outlier detection to ensure quality input for AI models.

- Implementation of AI algorithms to identify risk clusters and forecast potential losses under various market scenarios.

- Deployment of automated visualization dashboards that dynamically update with new data and AI insights.

Tools Used:

- Python libraries such as TensorFlow and Scikit-Learn for AI modeling.

- Tableau and Power BI enhanced with AI integration for visualization automation.

Outcomes Achieved:

- Faster identification of high-risk asset clusters, reducing manual analysis time by 50%.

- Enhanced decision-making through real-time, AI-driven visual insights.

- Improved risk management strategies aligned with predictive analytics results.

Healthcare Industry: Patient Data Monitoring and Outcome Visualization

A healthcare provider employed AI-based visualization to track patient health data and predict treatment outcomes. The system utilized natural language processing (NLP) to analyze unstructured clinical notes and machine learning models for outcome prediction.

Steps Taken:

- Data collection from electronic health records (EHRs), wearable devices, and lab reports.

- Data preprocessing involving anonymization, missing value imputation, and feature extraction from clinical notes.

- Training of AI models such as random forests and deep learning networks to assess patient risk levels and forecast recovery probabilities.

- Automated generation of interactive dashboards displaying patient trajectories, risk scores, and predictive insights.

Tools Used:

- Python with SpaCy and TensorFlow for NLP and deep learning.

- QlikView for developing dynamic, AI-powered visualizations.

Outcomes Achieved:

- Improved patient monitoring with proactive alerts based on AI predictions.

- Enhanced clinical decision-making with clear, interactive visual summaries.

- Reduced manual reporting efforts, saving approximately 30% of clinical staff time.

Retail Industry: Customer Behavior Analysis and Sales Visualization

A major retail chain adopted AI-driven visualization to analyze customer purchasing patterns and optimize inventory management. The process involved employing clustering and association rule mining algorithms to uncover hidden customer segments and product affinities.

Steps Taken:

- Data integration from point-of-sale systems, loyalty programs, and online transactions.

- Preprocessing to clean, categorize, and anonymize customer data while handling missing entries.

- Application of AI techniques to segment customers and identify cross-selling opportunities.

- Automation of dashboards that display real-time sales trends, customer segments, and product recommendations.

Tools Used:

- Apache Spark for data processing at scale.

- R and Python for AI modeling, integrated with Tableau for visualization automation.

Outcomes Achieved:

- Increased sales through targeted marketing campaigns driven by AI insights.

- Optimized stock levels, reducing overstock and stockouts by 20%.

- Enhanced customer engagement with personalized visualized recommendations.

Summary of Case Studies

| Industry | AI Techniques Employed | Results | Lessons Learned |
|---|---|---|---|
| Financial Services | Clustering, Neural Networks | Real-time risk visualization, faster decision-making | Importance of data quality and seamless integration of AI with visualization tools |
| Healthcare | NLP, Random Forests, Deep Learning | Enhanced patient monitoring, reduced manual reporting | Handling unstructured data is critical for comprehensive insights |
| Retail | Clustering, Association Rule Mining | Optimized inventory, increased sales, personalized marketing | Data privacy and customer segmentation strategies are vital for success |

Future Trends and Innovations in AI for Data Visualization Automation

The landscape of AI-driven data visualization continues to evolve rapidly, driven by emerging technologies and innovative approaches. Staying abreast of these developments is crucial for organizations and professionals aiming to leverage automation for insightful and dynamic data storytelling. This section explores the upcoming trends, predicted advancements, and effective strategies to remain informed in this dynamic field.

Emerging AI Technologies Influencing Visualization Automation

Advancements in AI are propelling the capabilities and sophistication of data visualization tools. Key emerging technologies include:

- Generative AI Models: Models such as GPT-4 and DALL·E are expanding beyond text and images to generate complex visualizations and narratives, enabling more personalized and context-aware visual storytelling.

- Explainable AI (XAI): As data visualizations become more automated, the need for transparency increases. XAI techniques facilitate understandable insights, helping users interpret AI-generated visualizations with confidence.

- Edge AI: Processing data closer to its source reduces latency and enables real-time visualization updates, crucial for IoT applications and live dashboards.

- Hybrid AI Systems: Combining symbolic reasoning with deep learning enhances the reasoning capabilities of visualization tools, allowing for more accurate and contextually relevant visual outputs.

- Meta-Learning and Few-Shot Learning: These techniques enable AI systems to adapt quickly to new visualization tasks with minimal data, increasing flexibility and reducing training time.

Predictions for the Evolution of AI-Driven Data Storytelling

The future of AI in data storytelling is poised for significant transformation, characterized by increased automation, personalization, and interactivity. Expected developments include:

- Highly Personalized Visual Narratives: AI will tailor data stories based on user preferences, expertise level, and contextual needs, making insights more accessible and engaging for diverse audiences.

- Automated Multi-Modal Storytelling: Integration of text, visuals, and audio will enable richer, multi-sensory data narratives that communicate insights more effectively across different platforms and user environments.

- Predictive and Prescriptive Visualization: AI will not only visualize historical data but also generate forecasts and recommendations, transforming visualizations into proactive decision-making tools.

- Real-Time Adaptive Visualizations: Dynamic visualization systems will adapt instantly to streaming data, providing up-to-the-minute insights, essential for areas like finance, healthcare, and operational monitoring.

- Enhanced Collaboration Features: AI-powered visualization platforms will facilitate collaborative storytelling, enabling teams to co-create, annotate, and interpret data visualizations seamlessly across geographies.
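As a rough illustration of the predictive visualization idea in the list above, the sketch below fits a straight-line trend to a short series and appends forecast points that a charting layer could draw in a different style. The function names and the payload shape are assumptions made for this example, and an ordinary least-squares line stands in for whatever forecasting model a real system would use.

```python
def fit_linear_trend(values):
    """Ordinary least-squares line through (0, v0), (1, v1), ...; needs at least 2 points."""
    n = len(values)
    xs = range(n)
    mean_x = sum(xs) / n
    mean_y = sum(values) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, values))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def chart_payload(values, horizon=3):
    """Combine historical points and trend-based forecast points for a chart."""
    slope, intercept = fit_linear_trend(values)
    history = [{"x": i, "y": v, "kind": "actual"} for i, v in enumerate(values)]
    forecast = [
        {"x": i, "y": intercept + slope * i, "kind": "forecast"}
        for i in range(len(values), len(values) + horizon)
    ]
    return history + forecast


# Made-up weekly sales figures for illustration only.
payload = chart_payload([10, 12, 14, 16], horizon=3)
```

Tagging each point with `kind` lets the front end render forecasts as a dashed line or shaded band, so viewers can tell projections from observed data.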

Strategies to Stay Updated with Advancements in AI-Driven Data Visualization

Remaining informed about the latest trends and innovations requires proactive engagement with multiple resources and communities. Effective methods include:

- Engaging with Academic and Industry Publications: Regularly reading journals such as the Journal of Data Science and industry whitepapers from leading AI and data visualization companies provides insights into cutting-edge research and applications.

- Participating in Conferences and Webinars: Events like NeurIPS, SIGGRAPH, and IEEE VIS offer opportunities to learn from experts, see new tools demonstrated, and network with innovators.

- Following Thought Leaders and Organizations: Subscribing to updates from AI research labs and technology firms such as Google AI, OpenAI, and Tableau keeps professionals informed about new tools and breakthroughs.

- Engaging in Online Communities and Forums: Platforms such as GitHub, Stack Overflow, and LinkedIn groups facilitate knowledge exchange, troubleshooting, and discussions on emerging trends.

- Experimenting with New Tools: Hands-on experience with beta versions, open-source projects, and sandbox environments accelerates learning and understanding of evolving capabilities.

“Staying ahead in AI-driven data visualization demands continuous learning, active engagement with innovations, and practical application of emerging technologies to transform data storytelling.”

Final Thoughts

In summary, mastering how to automate data visualization with AI empowers users to produce faster, more accurate, and engaging visual insights. As technology evolves, staying informed about emerging trends and tools will be essential for leveraging AI’s full potential in data storytelling and analysis.